WEB llama-2-13b-chatggmlv3q8_0bin offloaded 4343 layers to GPU. WEB Some differences between the two models include Llama 1 released 7 13 33 and 65 billion parameters while. WEB A notebook on how to fine-tune the Llama 2 model with QLoRa TRL and Korean text classification dataset. WEB Llama 2 The next generation of our open source large language model available for free for research and. Llama 2 is a family of state-of-the-art open-access large language models released by. The model you use will vary depending on your hardware..

WEB The tutorial provided a comprehensive guide on fine-tuning the LLaMA 2 model using techniques like QLoRA PEFT and SFT to overcome memory and compute limitations. WEB It shows us how to fine-tune Llama 27B you can learn more about Llama 2 here on a small dataset using a finetuning technique called QLoRA this is done on Google Colab. WEB In this blog post we will discuss how to fine-tune Llama 2 7B pre-trained model using the PEFT library and QLoRa method Well use a custom instructional dataset to build a. In this notebook and tutorial we will fine-tune Metas Llama 2 7B Watch the accompanying video walk-through but for Mistral here If youd like to see that notebook instead. WEB To suit every text generation needed and fine-tune these models we will use QLoRA Efficient Finetuning of Quantized LLMs a highly efficient fine-tuning technique that..

Smallest significant quality loss - not recommended for most purposes. Initial GGUF model commit models made with llamacpp commit bd33e5a fd11da7 7. WEB Llama 2 Chat the fine-tuned version of the model which was trained to follow instructions and act as a chat bot. Lets work this out in a step by step way to be sure we have the right answer prompt PromptTemplatetemplatetemplate. If you are on Linux or Mac use the..

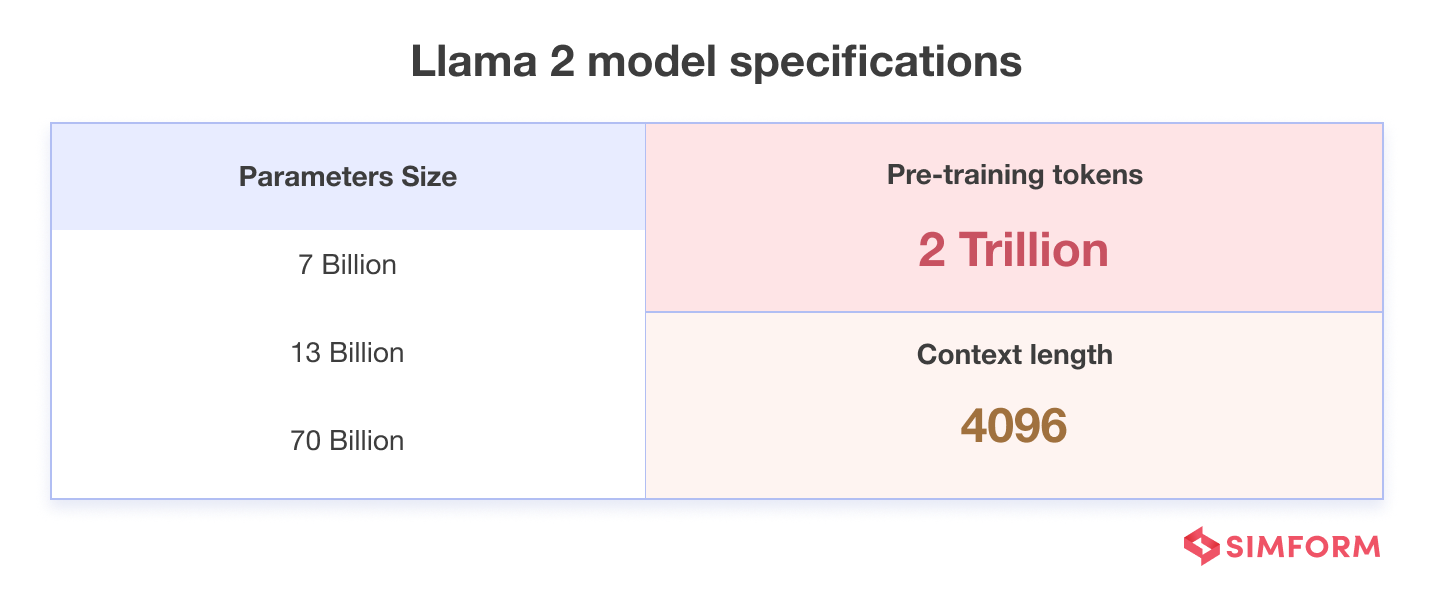

WEB July 18 2023 Abstract In this work we develop and release Llama 2 a collection of pretrained and fine-tuned large language models LLMs ranging in scale from 7 billion to 70 billion parameters. WEB In this work we develop and release Llama 2 a collection of pretrained and fine-tuned large language models LLMs ranging in scale from 7 billion to 70 billion parameters. Open source free for research and commercial use Were unlocking the power of these large language models Our latest version of Llama Llama 2 is now. Introduces the next version of LLaMa LLaMa 2 auto-regressive transformer Better data cleaning longer context length more tokens and grouped-query attention GQA - K and. WEB The abstract from the paper is the following In this work we develop and release Llama 2 a collection of pretrained and fine-tuned large language models LLMs ranging in scale from 7..

Comments